The Problem

OpenAI uses a List of Dictionaries to manage conversations in the ChatGPT API, and it's a nightmare.

This is the code to append the model's last response to your conversation and print it.

# append the bot's response to your conversation

conversation.append({'role': response.choices[0].message.role, 'content': response.choices[0].message.content})

# to print the last message

print(conversation[-1]["content"])

This is ridiculous.

If you try to do anything more advanced, like limiting the conversation history to a certain number of messages, or ensuring the System message is always the most recent message in the conversation, it becomes even more absurd.
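To make that concrete, here is a rough sketch of what trimming the history by hand might look like with the raw list of dictionaries. The helper name `trim_history` and its `max_messages` parameter are made up for illustration; they are not part of the OpenAI API or of Chatterstack.

```python
def trim_history(conversation, max_messages):
    """Keep the newest non-system messages and put the system message last.

    Hypothetical helper, shown only to illustrate the manual bookkeeping.
    """
    system_messages = [m for m in conversation if m["role"] == "system"]
    other_messages = [m for m in conversation if m["role"] != "system"]

    # Keep only the most recent non-system messages.
    trimmed = other_messages[-max_messages:] if max_messages > 0 else []

    # Re-append the latest system message so it is the most recent entry.
    if system_messages:
        trimmed.append(system_messages[-1])
    return trimmed


conversation = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Hi"},
    {"role": "assistant", "content": "Hello!"},
    {"role": "user", "content": "What's the weather?"},
]
trimmed = trim_history(conversation, max_messages=2)
print([m["role"] for m in trimmed])
```

Even this small helper has to separate roles, slice, and reassemble the list, which is the kind of repetitive work the rest of this README is about.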

The Simple Solution

The conversation variable is just a list of dictionaries, and many aspects of those dictionaries are highly predictable. That means we can abstract away a lot of the mess without losing any of the inherent flexibility.

Here is a fully functional chatbot program using Chatterstack:

import chatterstack

import os

os.environ['OPENAI_API_KEY'] = 'your_api_key_here'

convo = chatterstack.Chatterstack()

while True:
    convo.user_input()
    convo.send_to_bot()
    convo.print_last_message()

That's the whole thing! This will run a full, ongoing chatbot program for you, while managing the messages, tracking tokens, and lots more.

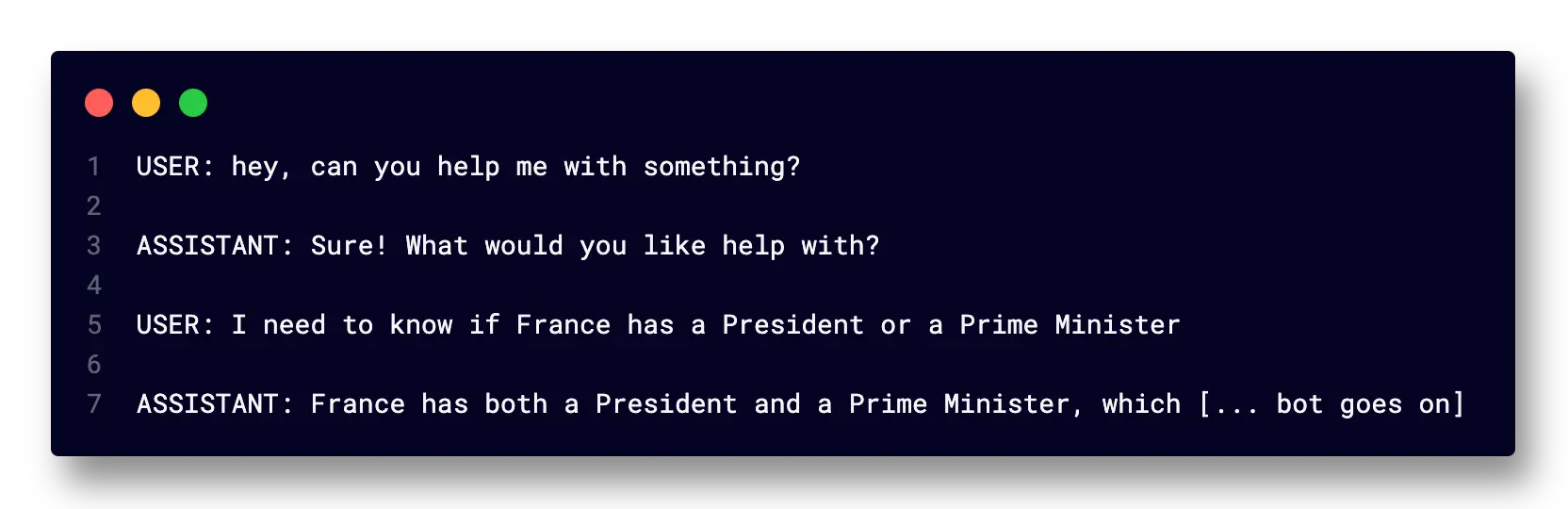

Here's what the conversation with that program would look like in the terminal:

So let's take a look at some of that flexibility we talked about. Here are a couple of the most basic options:

while True:
    # Change the user's display name
    convo.user_input(prefix="ME: ")

    # Change any of the API arguments
    convo.send_to_bot(model="gpt-4", temperature=1, max_tokens=40)

    # Change the line spacing of the convo
    convo.print_last_message(prefix="GPT: ", lines_before=0, lines_after=2)

    # And let's have it print the total tokens after each turn
    print(convo.tokens_total_all)

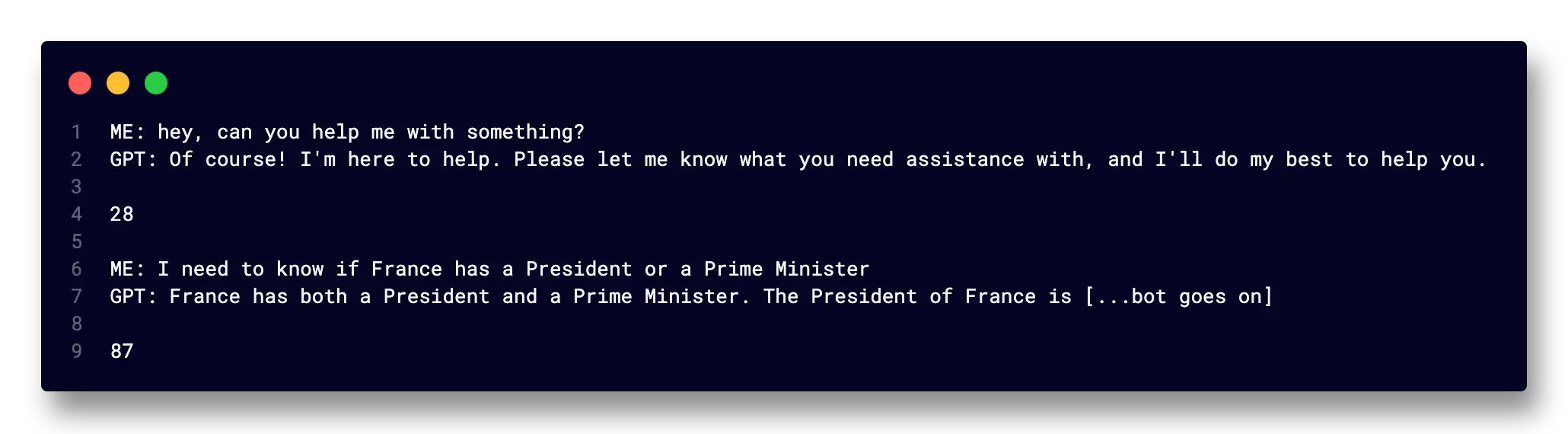

Here's what that conversation would look like in the terminal:

Using Chatterstack

Installation

Chatterstack is available on GitHub and pip:

pip install chatterstack

Getting Input

The user_input() method works like Python's built-in input() function, except that it also automatically appends the user's input to your conversation variable as a correctly formatted dict.

convo.user_input()

As seen above, this method defaults to prompting the user with "USER: ", but you can change it to whatever you'd like:

convo.user_input("Ask the bot: ")
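For intuition, the behavior described above can be approximated in a few lines. This is only a sketch under stated assumptions: `MiniConversation` and its `input_func` parameter are hypothetical names invented here so the example can run without a terminal, and none of it is Chatterstack's actual implementation.

```python
class MiniConversation:
    """Hypothetical stand-in, not the real Chatterstack class."""

    def __init__(self, input_func=input):
        self.list = []            # the raw list of message dicts
        self._input = input_func  # swappable so the sketch runs non-interactively

    def user_input(self, prefix="USER: "):
        # Prompt the user, then store the reply as a correctly formatted dict.
        text = self._input(prefix)
        self.list.append({"role": "user", "content": text})
        return text


# Simulate a user typing "Hello there" at the prompt.
convo = MiniConversation(input_func=lambda prompt: "Hello there")
convo.user_input("Ask the bot: ")
print(convo.list)
```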

Adding Messages

Maybe you aren't using the terminal. Or maybe you want to alter the input somehow before appending it. There are several ways to take any string variable and add it to the conversation as a correctly formatted dict:

# Use the .add() method. Pass it the role, then the content

convo.add("user", message_string)

# or, use the role-specific methods & just pass the content

convo.add_user(message_string)

convo.add_assistant("I'm a manually added assistant response")

convo.add_system("SYSTEM INSTRUCTIONS - you are a helpful assistant who responds only in JSON")

There is also .insert() if you want to add a message at a specific index, instead of appending it to the end of the conversation:

# Here's the format

convo.insert(index, role, content)

# example

convo.insert(4, "system", "IMPORTANT: Remember to not apologize to the user so much")
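Under the hood, both operations amount to building a dict and placing it in the list. The sketch below shows that idea on a plain list. The function names mirror the methods above, but the bodies and the role check are assumptions made for illustration, not the library's code.

```python
# Roles used in this README; the check below is an illustrative choice.
KNOWN_ROLES = {"system", "user", "assistant"}


def make_message(role, content):
    """Build one correctly formatted message dict."""
    if role not in KNOWN_ROLES:
        raise ValueError(f"unexpected role: {role!r}")
    return {"role": role, "content": content}


def add(messages, role, content):
    """Append a message to the end of the conversation."""
    messages.append(make_message(role, content))


def insert(messages, index, role, content):
    """Place a message at a specific position in the conversation."""
    messages.insert(index, make_message(role, content))


messages = []
add(messages, "user", "Hi")
add(messages, "assistant", "Hello!")
insert(messages, 0, "system", "Keep answers brief.")
print([m["role"] for m in messages])
```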

Sending Messages to the API

The chatterstack "send_to_bot" method is a standard OpenAI API call, but it's simpler to use and does a bunch of handy stuff for you in the background. Call it like this:

convo.send_to_bot()

That's it! Chatterstack will take care of passing all the default values for you, as well as appending the response to your conversation. It also keeps token counts for you (and in the advanced class, much more).
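As a rough picture of that background work, the sketch below appends a reply to the conversation and adds up token usage. It assumes a response shaped like a Chat Completions result (a `choices` list and a `usage` object with `total_tokens`), and it uses a hand-written dict in place of a real API call. The `record_response` function and the `totals` dict are hypothetical names, not Chatterstack internals.

```python
def record_response(conversation, totals, response):
    """Append the model's reply and accumulate the reported token usage."""
    message = response["choices"][0]["message"]
    conversation.append({"role": message["role"], "content": message["content"]})
    totals["total_tokens"] += response["usage"]["total_tokens"]


# A hand-written stand-in for an API response, so the sketch runs offline.
fake_response = {
    "choices": [{"message": {"role": "assistant", "content": "Hi there!"}}],
    "usage": {"prompt_tokens": 9, "completion_tokens": 3, "total_tokens": 12},
}

conversation = [{"role": "user", "content": "Hello"}]
totals = {"total_tokens": 0}
record_response(conversation, totals, fake_response)
print(conversation[-1]["content"], totals["total_tokens"])
```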

Changing the default API parameters:

By default, chatterstack uses these values:

model="gpt-3.5-turbo",

temperature=0.8,

top_p=1,

frequency_penalty=0,

presence_penalty=0,

max_tokens=200

There are several ways to change these. Choose what is most convenient to you.

The most obvious way is just to pass them as arguments when you make the call. For instance, if you wanted GPT-4 and 800 max tokens:

convo.send_to_bot(model="gpt-4", max_tokens=800)

This approach is great when you want to make just one call with some different values.

But if you know you want different values for the whole conversation, you can define them in caps at the top of your file, and initialize chatterstack using the globals() dict, like this:

MODEL = "gpt-4"

TEMPERATURE = 0.6

FREQUENCY_PENALTY = 1.25

MAX_TOKENS = 500

# initialize with 'globals()'

convo = chatterstack.Chatterstack(user_defaults=globals())

# and now you can just call it like this again

convo.send_to_bot()
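One plausible way a globals() dict can be turned into settings is to look for upper-case names that match known parameters and ignore everything else. The sketch below does that with the default values listed above. The `resolve_defaults` function is a hypothetical helper, and the real library may resolve the values differently.

```python
# Default values as listed earlier in this README.
DEFAULTS = {
    "model": "gpt-3.5-turbo",
    "temperature": 0.8,
    "top_p": 1,
    "frequency_penalty": 0,
    "presence_penalty": 0,
    "max_tokens": 200,
}


def resolve_defaults(user_defaults=None):
    """Overlay upper-case names (e.g. MAX_TOKENS) onto the default settings."""
    resolved = dict(DEFAULTS)
    for name, value in (user_defaults or {}).items():
        key = name.lower()
        # Only all-caps names that match a known parameter are picked up.
        if name.isupper() and key in resolved:
            resolved[key] = value
    return resolved


# Stand-in for a module's globals(): two settings plus unrelated names.
fake_globals = {"MODEL": "gpt-4", "MAX_TOKENS": 500, "os": object(), "convo": None}
settings = resolve_defaults(fake_globals)
print(settings["model"], settings["max_tokens"], settings["temperature"])
```

Filtering by name this way means unrelated module-level variables in globals() are simply skipped.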

Finally, if you want to just use the standard OpenAI call that you were using before, you can still do that! Just pass it the .list attribute of your Chatterstack, which is the raw list of dictionaries:

response = openai.ChatCompletion.create(
    model="gpt-3.5-turbo",
    messages=convo.list,  # <--- right here
    temperature=0.9,
    top_p=1,
    frequency_penalty=0,
    presence_penalty=0,
    max_tokens=200,
)

Accessing and Printing Messages

Super Simple:

# Print the "content" of the last message

convo.print_last_message()

Or, if you wanted to do formatting on the string first...

# This represents/is the content string of the last message

convo.last_message

# So you can do stuff like this:

message_in_caps = convo.last_message.upper()

# print the message in all upper case

print(message_in_caps)

Chatterstack Advanced Library

The Chatterstack Advanced class extends the base class, and has much more functionality built-in. It is also easily extensible.

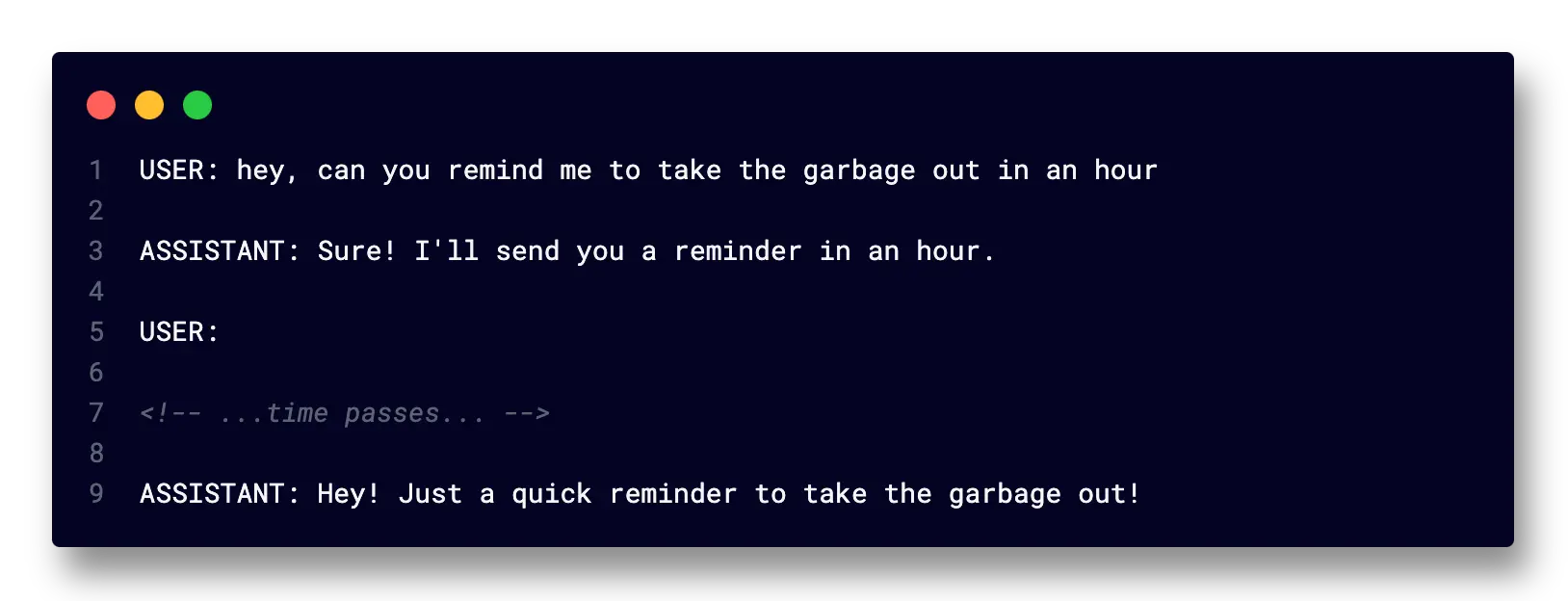

Reminders

You can now tell the bot "remind me to take the take the garbage out at 8pm", or "remind me to take the garbage out in an hour"

Issuing Commands

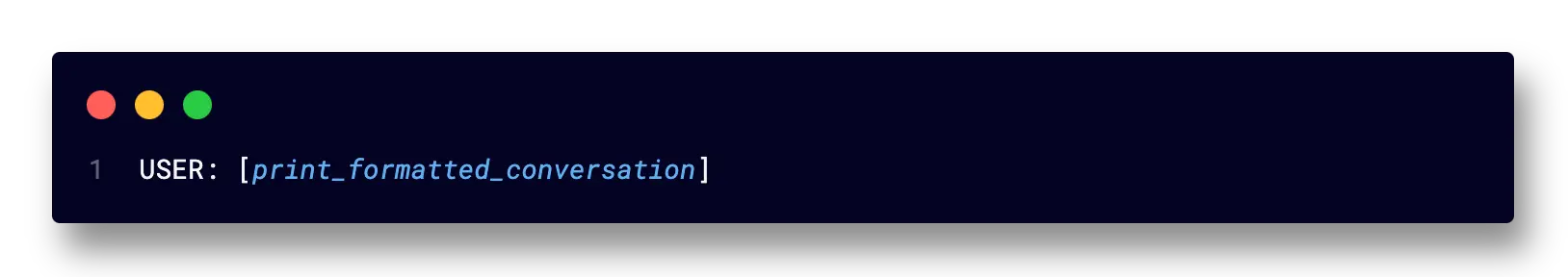

With the Advanced class, you can issue commands from the user input:

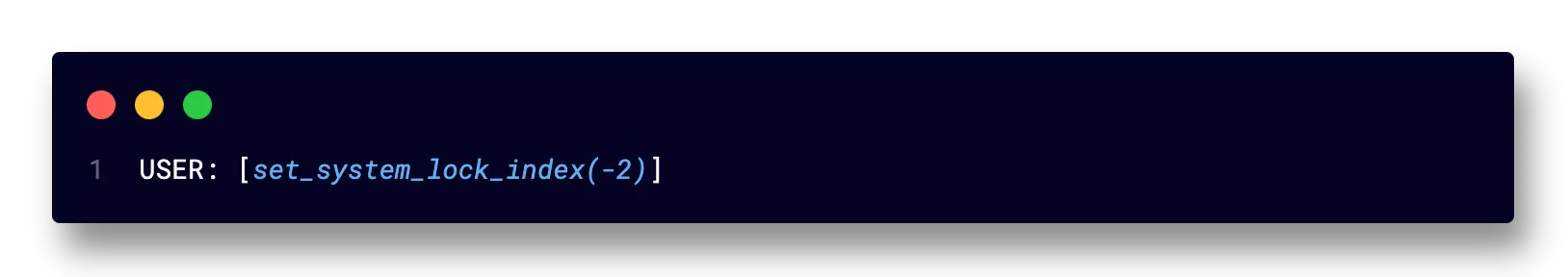

One very nifty feature is that you can also self-call any method (or set any attribute) of the chatterstack class itself, right from the chat interface:

So, while the initial chat program example at the start of this repo may have seemed simplistic at first, you can see that it's really all you need, as almost any functionality you want can actually be called from inside the chat itself.

Extra Advanced: Adding your own commands

If you want to write your own commands, chatterstack provides a simple interface class to do so, called ICommand.

class ICommand:
    def execute(self):
        pass

Basically, you write your command as a class, which inherits from the ICommand class, and has an "execute" method (which is what you want to actually happen when your command gets called.)

Here is an example:

class ExampleCommand(ICommand):
    def execute(self):
        print("An example command that prints this statement right here.")

If your command needs arguments, you can also add an __init__ method, and pass it *args exactly like this:

class ExampleCommand(ICommand):
    def __init__(self, *args):
        # Store any arguments passed along with the command
        self.args = args

    def execute(self):
        print("Example command that prints this statement with this extra stuff:", self.args)

The last thing you need to do is assign your command a trigger word or phrase by adding it to the init method in the ChatterstackAdvanced class.

class ChatterstackAdvanced(Chatterstack):
    def __init__(self, ...):
        # ...Lots of other code here...

        # This command is already in the class:
        self.command_handler.register_command('save', SaveConversationCommand(self))

        # Add your new command
        self.command_handler.register_command("example", ExampleCommand)
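To see how the pieces could fit together, here is a self-contained sketch of a command registry in the same spirit. Everything in it is an assumption made for illustration: the `CommandHandler` class, its `handle` method, the `EchoCommand` example, and the rule that the first word of the input is the trigger are all hypothetical, and Chatterstack's own handler may work differently.

```python
class ICommand:
    def execute(self):
        pass


class EchoCommand(ICommand):
    """Hypothetical command that returns its arguments as one string."""

    def __init__(self, *args):
        self.args = args

    def execute(self):
        return "echo: " + " ".join(self.args)


class CommandHandler:
    """Hypothetical registry mapping trigger words to commands."""

    def __init__(self):
        self._commands = {}

    def register_command(self, trigger, command):
        self._commands[trigger] = command

    def handle(self, text):
        # Assumption: the first word is the trigger, the rest are arguments.
        parts = text.split()
        if not parts or parts[0] not in self._commands:
            return None  # not a command, so treat it as a normal message
        command = self._commands[parts[0]]
        # Accept either a command class (built with the arguments)
        # or an already constructed command instance.
        if isinstance(command, type):
            command = command(*parts[1:])
        return command.execute()


handler = CommandHandler()
handler.register_command("echo", EchoCommand)
print(handler.handle("echo hello world"))
print(handler.handle("just a normal message"))
```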

You can find more documentation and the full source code on GitHub.